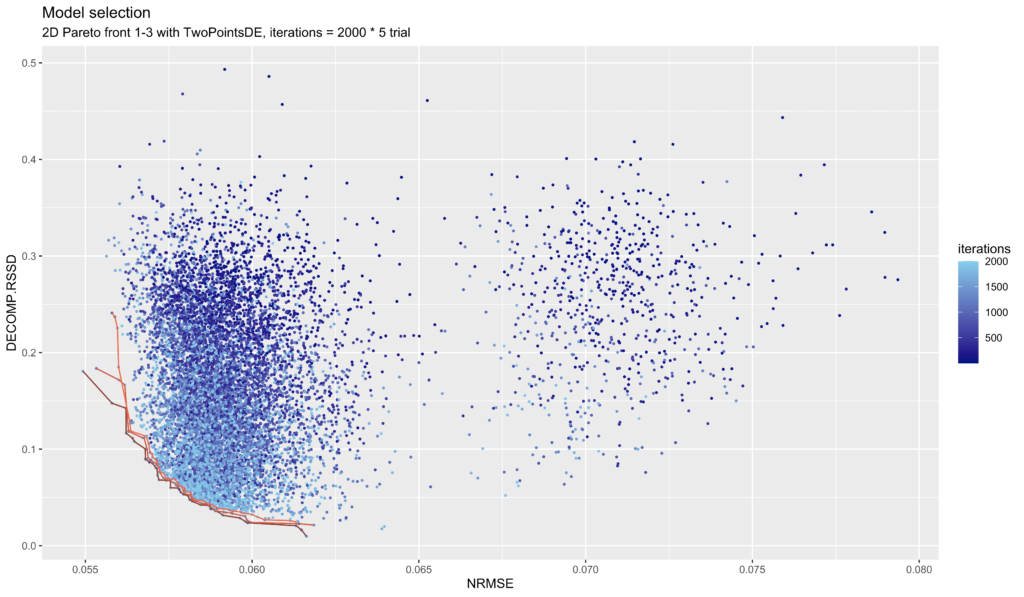

Most people aren’t aware that Facebook (Meta) invented a new ‘accuracy’ metric. Their own documentation calls it a ‘major innovation’ of Robyn, their Marketing Mix Modeling (MMM) library. It’s both genius and controversial.

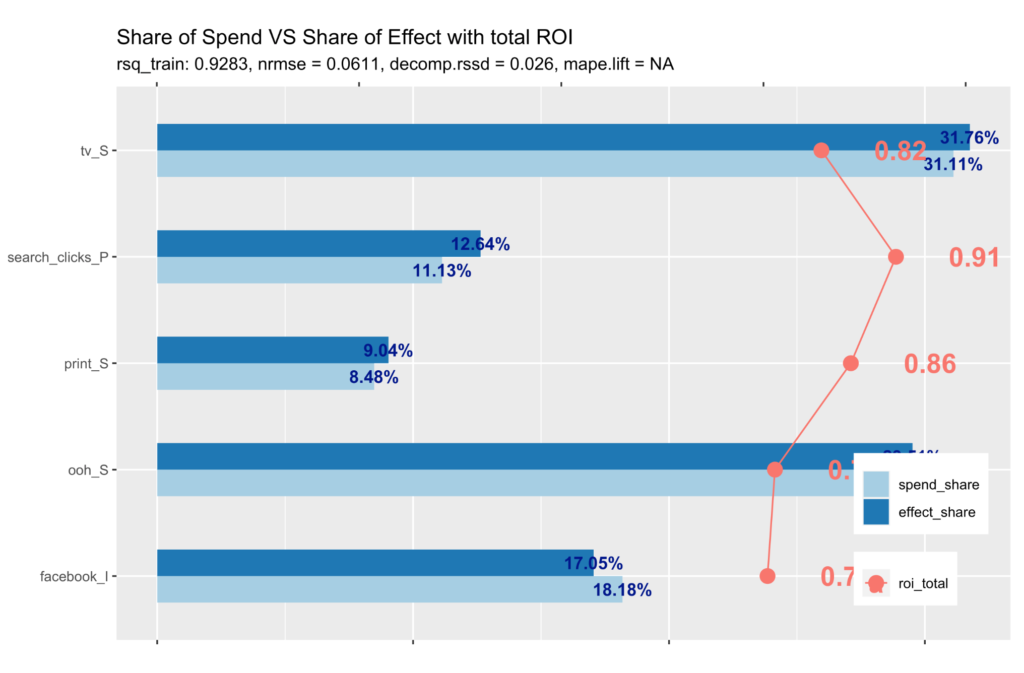

The metric we’re talking about is “Decomp RSSD”, a measure of how much the model agrees with your current budget allocation. As Robyn is open source, we get to see exactly how they calculate it:

decomp.rssd = sqrt(sum((effect_share-spend_share)^2))

Decomp RSSD Meaning:

It’s short for “decomposition root sum of squared distance” and it’s Facebook’s way of accounting for business logic. If you’re spending 90% of your budget on TV and the model says actually Facebook ads drove 90% of your revenue… the model is probably wrong.
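To make the TV versus Facebook example concrete, here is a minimal Python sketch of the same formula. The function name `decomp_rssd` and the share numbers are my own illustration, not Robyn's code (Robyn itself is written in R).

```python
import math


def decomp_rssd(effect_share, spend_share):
    """Root sum of squared distances between each channel's effect share and spend share."""
    if effect_share.keys() != spend_share.keys():
        raise ValueError("both inputs must cover the same channels")
    return math.sqrt(
        sum((effect_share[ch] - spend_share[ch]) ** 2 for ch in spend_share)
    )


# Hypothetical shares: 90% of the budget goes to TV.
spend_share = {"tv": 0.9, "facebook": 0.1}

# Model 1 claims Facebook drove 90% of the effect: far from the budget split.
implausible = {"tv": 0.1, "facebook": 0.9}

# Model 2 claims a modest shift towards Facebook: close to the budget split.
plausible = {"tv": 0.8, "facebook": 0.2}

rssd_implausible = decomp_rssd(implausible, spend_share)  # sqrt(0.8^2 + 0.8^2)
rssd_plausible = decomp_rssd(plausible, spend_share)      # sqrt(0.1^2 + 0.1^2)
```

The first model scores roughly eight times worse than the second, which is the kind of gap that lets an automated process flag it as unrealistic.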

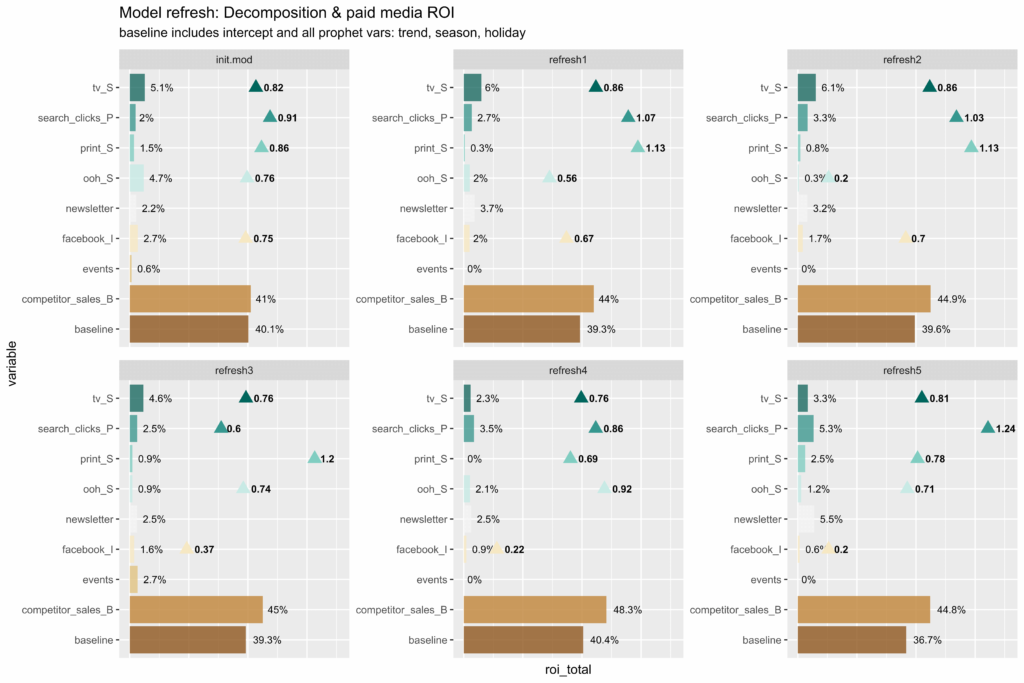

Every experienced modeler takes the ‘plausibility’ of the model’s results into account, which is why domain knowledge is so important in MMM. As Robyn builds then selects from 10,000 models automatically, they needed a mathematical way to express ‘this model is unrealistic’.

However, that creative way of codifying ‘realistic’ at scale is exactly what makes this metric so controversial.

The whole point of marketing attribution is to find where you’re overspending on poorly performing channels, so you can reallocate your budget and maximize return on ad spend (ROAS). Yet in using Decomp RSSD as one of the two core metrics they optimize for, Robyn is by definition throwing away models that say ‘your budget was wasted’!
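One common way to optimize for two metrics at once is to keep only the candidates that no other candidate beats on both. The sketch below assumes that Pareto-style selection; the `pareto_front` helper, the candidate models, and their scores are made up for illustration and are not taken from Robyn's source.

```python
def pareto_front(models):
    """Keep models that no other model matches or beats on both metrics (lower is better)."""
    front = []
    for m in models:
        dominated = any(
            o["nrmse"] <= m["nrmse"]
            and o["decomp_rssd"] <= m["decomp_rssd"]
            and (o["nrmse"] < m["nrmse"] or o["decomp_rssd"] < m["decomp_rssd"])
            for o in models
        )
        if not dominated:
            front.append(m)
    return front


# Hypothetical candidates: prediction error (nrmse) and distance from the budget split.
candidates = [
    {"id": "A", "nrmse": 0.05, "decomp_rssd": 0.90},  # best fit, disagrees with the budget
    {"id": "B", "nrmse": 0.07, "decomp_rssd": 0.30},
    {"id": "C", "nrmse": 0.12, "decomp_rssd": 0.10},  # agrees with the budget, worst fit of the three
    {"id": "D", "nrmse": 0.08, "decomp_rssd": 0.85},  # B beats it on both metrics
]

front_ids = [m["id"] for m in pareto_front(candidates)]
```

Under this assumption, model D is discarded even though its fit is close to B's, purely because it strays further from the current budget. A model that disagrees with your spend only stays in contention if nothing else can match its accuracy.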

Like any good controversy, there are two sides to the story. So I’ll argue both sides and let you decide whether or not you’ll incorporate Decomp RSSD in your modeling process.

Argument For:

Talk to any marketing mix modeling expert and they’ll tell you their process involves looking at the model coefficients to decide if the model makes sense. Decomp RSSD is just automating this manual process so it can happen at scale, saving you hundreds of hours.

It’s common to find models that have very high ‘accuracy’ (they’re good at predicting data points they haven’t seen before), but with parameter values that are extremely unlikely. For example, due to multicollinearity (multiple variables being correlated with each other), you may encounter a model that tells you Facebook ads drove negative revenue – a highly unlikely scenario unless your ad was so bad it convinced people not to buy!

The most ‘accurate’ model in the world won’t influence business decisions if executives don’t believe the results. What’s better: the ‘correct’ model that gets ignored, or a model that gets you approval to move 10% of the budget?

Nobody in leadership wants to hear they’ve been wasting 90% of their marketing budget, even if it’s true. The politically savvy move is to return a model that says “we’re doing ok, but we can do a little better”. When you reallocate the budget and run the model again, it’ll have more data to recommend a more aggressive reallocation, this time to a trusting audience after that initial win.

Sure, optimizing for politics doesn’t sound very scientific, but we’re running a business, not a lab. Ignoring how decisions actually get made in organizations is a mistake many technical people make, and you absolutely can lose your job over a controversial model.

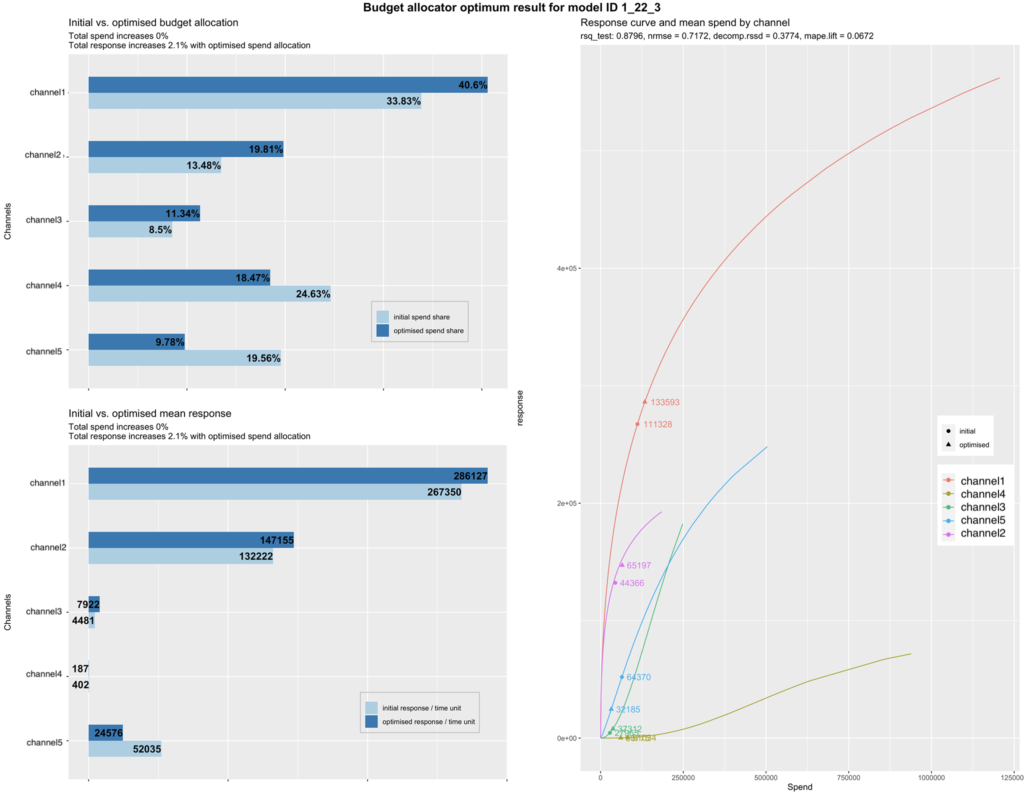

Besides, when you run Robyn you’ll see it still recommends big changes to budgets, despite optimizing to RSSD. Often it’s bold enough to say a channel drove zero return, and should have its entire budget reallocated away! Radical recommendations like this are more credible when you know Robyn did everything in its power to assume your initial budget allocation was correct.

We have to remember that MMM as a technique can’t really find the ‘truth’. The reality is that “all models are wrong, some are useful”. If Decomp RSSD can help us be less wrong by getting rid of extreme results, then the final analysis will be a lot more useful. If you combine it with model calibration from incrementality tests then you’re getting as close to the truth as possible.

Anyway, what’s the alternative? Are you going to manually choose between 10,000 models yourself? Or just eyeball the results of a handful of models and risk missing out on something much better? Decomp RSSD is a gift to the MMM industry, and it’s here to stay.

Argument Against:

I’m not going to argue against incorporating business logic into MMM: it’s essential. There are just better ways to do it. Bayesian techniques let you incorporate business logic into your model explicitly and more flexibly.

If you know a marketing channel isn’t likely to drive negative revenue, you can just tell your model that through Bayesian priors. It won’t even explore that territory when doing simulations, making the whole model building process more efficient. This can also be a more stable approach than refreshing a frequentist model, where you’re not guaranteed as much consistency in model parameters over time.

Of course ‘all models are wrong’, but what makes them useful is if they’re consistent with what we know, not just similar to our budget allocation. Bayesian models let you incorporate all of your domain knowledge and other attribution methods in one place, and run many thousands more simulations to find a reality that’s most similar to what you’ve observed.

MMM is just one of the three pillars of marketing attribution and if you use a modeling method that ignores other attribution techniques, it’ll be hard to build one central source of truth for decision making. You’ll be stuck forever caveating decisions and going round in circles, saying things like “Robyn says Snapchat ads drove no revenue, but we know from survey data 10% of customers say they clicked on a Snapchat ad, yet the Snapchat pixel is saying it drove 20% of our sales, but when we ran an incrementality test last year it was 15%…” – good luck convincing leadership to trust you with marketing budget.

It’s not enough to know that you spent the right amount on a channel last quarter; you need answers to strategic questions like “how much to spend on Black Friday?” With Robyn’s approach to seasonality, you’ll end up with a curious answer: that marketing performance is the same in each season. That’s because Robyn controls for seasonality rather than modeling its impact. Follow this advice and blindly trust a model optimized with Decomp RSSD, and you’ll be underspending during your peak period, when demand is highest!

Even if you do believe your current budget allocation is most likely correct, and you’re sold on Decomp RSSD as a metric, you’re not truly optimizing for it with Robyn. Their Nevergrad optimization algorithm that uses Decomp RSSD is only applied to the model’s hyperparameters: adstocks and saturation rates. The core algorithm used by Robyn is standard ridge regression, which only considers accuracy.
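Here is a toy Python sketch of that two-level structure, under heavy assumptions: a plain grid search stands in for Nevergrad, only a geometric adstock decay (`theta`) is tuned, saturation is left out, the ridge fit has two channels and no intercept, and the error is normalized by the range of the target. All names and data are hypothetical.

```python
import itertools
import math


def geometric_adstock(spend, theta):
    """Each period carries over a fraction `theta` of the previous adstocked value."""
    out, carry = [], 0.0
    for x in spend:
        carry = x + theta * carry
        out.append(carry)
    return out


def ridge_two_features(x1, x2, y, lam):
    """Closed-form ridge, no intercept: solve (X'X + lam*I) b = X'y.
    Nothing passed in here describes spend shares, so the fit sees accuracy only."""
    a11 = sum(v * v for v in x1) + lam
    a22 = sum(v * v for v in x2) + lam
    a12 = sum(u * v for u, v in zip(x1, x2))
    b1 = sum(u * v for u, v in zip(x1, y))
    b2 = sum(u * v for u, v in zip(x2, y))
    det = a11 * a22 - a12 * a12
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det


def evaluate(tv, fb, y, theta_tv, theta_fb, lam=1e-6):
    """Fit the inner ridge for one hyperparameter choice, then score it on both metrics."""
    x_tv = geometric_adstock(tv, theta_tv)
    x_fb = geometric_adstock(fb, theta_fb)
    b_tv, b_fb = ridge_two_features(x_tv, x_fb, y, lam)
    pred = [b_tv * u + b_fb * v for u, v in zip(x_tv, x_fb)]
    rmse = math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, y)) / len(y))
    nrmse = rmse / (max(y) - min(y))  # assumed normalization: range of y
    effect_tv, effect_fb = b_tv * sum(x_tv), b_fb * sum(x_fb)
    total_effect, total_spend = effect_tv + effect_fb, sum(tv) + sum(fb)
    gaps = [
        effect_tv / total_effect - sum(tv) / total_spend,
        effect_fb / total_effect - sum(fb) / total_spend,
    ]
    rssd = math.sqrt(sum(g * g for g in gaps))
    return {"theta": (theta_tv, theta_fb), "nrmse": nrmse, "decomp_rssd": rssd}


# Made-up weekly spend; revenue is generated with known decays 0.5 (TV) and 0.2 (Facebook).
tv = [10, 20, 30, 20, 10, 30, 40, 20]
fb = [5, 5, 10, 20, 15, 5, 10, 25]
y = [2 * a + 3 * b for a, b in zip(geometric_adstock(tv, 0.5), geometric_adstock(fb, 0.2))]

# Outer search over hyperparameters (grid search as a stand-in for Nevergrad).
grid = [0.2, 0.5, 0.8]
results = [evaluate(tv, fb, y, t1, t2) for t1, t2 in itertools.product(grid, grid)]
best_fit = min(results, key=lambda r: r["nrmse"])
```

In this sketch, Decomp RSSD can only change which `theta` values the outer search prefers. The coefficients for any given `theta` come out of `ridge_two_features`, which never looks at the budget split.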