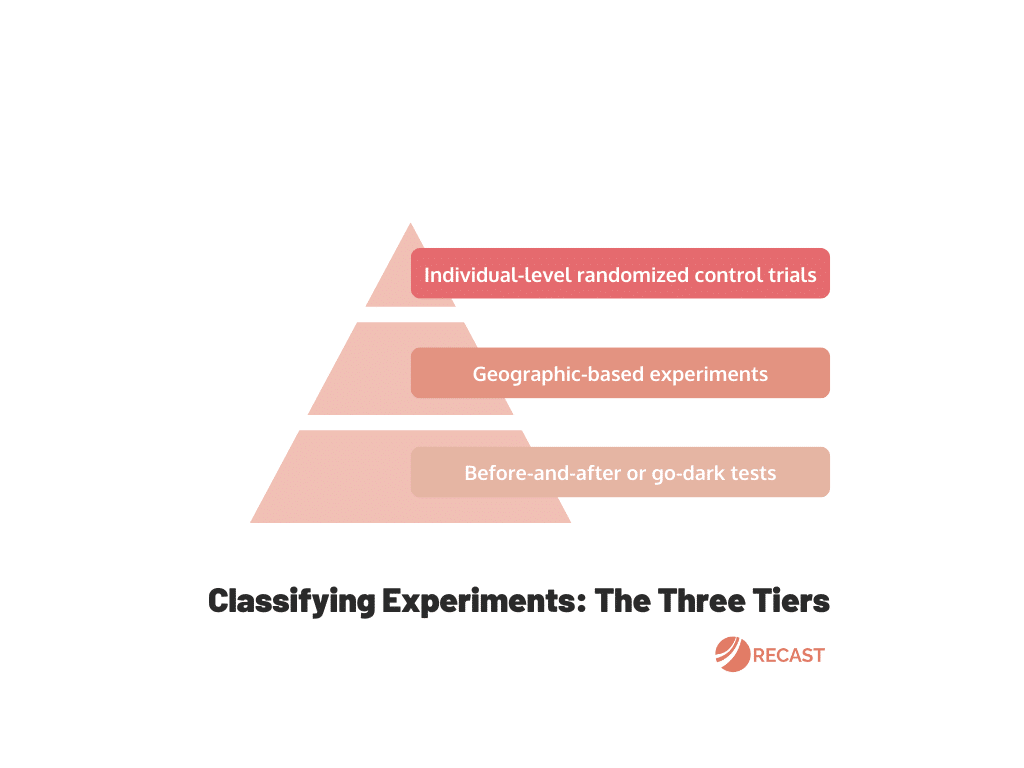

Many marketers have been turning to experiments to help them get a true read on incrementality for their marketing channels. However, it’s important to be aware that there’s a hierarchy of the evidence you get from different types of experiments.

Classifying Experiments: The Three Tiers

We think about three different types of experiments:

- Individual-level randomized control trials

- Geographic-based experiments

- Before-and-after or go-dark tests

Individual-Level Randomized Control Trials: The Gold Standard

The best-case scenario is an individual-level randomized control trial where you have a group of people, randomly divide them in two, show real ads to one group and “fake” ads to the second group, and then compare conversion between the two groups. These types of trials can be very difficult and expensive to perform (since you have to pay for the “fake” ads you’re showing to the control group), and for many channels they’re actually impossible (TV, podcast, and radio to name a few).

Geographic-Based Experiments: The Second Tier

In the next tier, you have other types of experiments you can run, like geo-lift tests. In these tests you perform a similar process except your two groups are groups of geographies instead of groups of individuals. In the US, people often do this at the DMA or state level. While these experiments are great, they’re generally not as precise because they’re not done at the individual level and you have to make stronger assumptions about the two geographies being comparable.

The issue with geo tests is that they’re not conducted at the same granular level, which means they could lack the sensitivity needed to detect smaller but impactful effects of marketing.

Finally, geo holdout tests simply don’t work as well for some channels. Podcasts and influencers for example are difficult (impossible?) to test this way since it’s impossible to control where someone listens to them.

Geo holdout tests offer great insights and I strongly recommend brands to get accustomed to using them but it’s crucial to remember their limitations and refrain from seeing them as a silver bullet to measurement problems.

Before-And-After or Go-Dark Tests: The Third Tier

You can also do before-and-after tests where you are spending in a channel and then turn off spend or dramatically increase spend to see what happens and compare the results before and after the change.

These tests are straightforward to implement and understand. You don’t need advanced technical knowledge or sophisticated software—just a clear timeline and reliable data. They’re also cost-effective and don’t require additional spend like creating “fake” ads or investing in new geographical regions.

We recently recommended that a customer turn off spend in a channel that made up 7% of their paid marketing program and we saw that their total revenue declined <1%, which was exactly in line with Recast’s model forecasts and estimates of channel performance.

However, these tests can only give you an estimate, and not a perfect one, of incrementality and require even stronger assumptions about generalizability. They also have a seasonality, industry trends, or other external events could skew results if not taken into consideration.

An easy first holdout test for your brand can be around brand search. Knowing whether brand search is incremental for your brand or not is often a question worth tens of thousands, hundreds of thousands, or millions of dollars. A great way to test this is through a go-dark experiment: eBay, for example, paused brand search ads for 30% of their users, and found that the ads did not have a significant impact on sales, prompting a change in their marketing strategy.

Marketing Mix Modeling: Tying Everything Together

One of the strengths of marketing mix modeling is that it can help you tie all of these data points together while taking into account different levels of certainty you’ll get from each of these tests. Additionally, MMM can incorporate observational data to estimate how present-day performance might be drifting from point-in-time snapshots from experiments.

As MMM tries to find causal inference problems where there is no absolute benchmark for comparison, it is the testing phase that confirms whether the outcomes are correct. Therefore, the core issue is not merely running the model and obtaining results; rather, it’s validating the integrity of these results.

Considering Quality and Uncertainty: A Guiding Principle

Whenever you’re measuring incrementality, make sure you consider the quality of the experiment, the associated uncertainty, and how you’re going to incorporate that data with other evidence you have in order to make forward-looking decisions. This can include other forms of evidence that fall into the last tier, such as surveys and digital tracking. These can provide directional evidence about what’s performing and what’s not, but they’re definitely in the bottom tier for getting evidence about the true incrementality of those marketing channels.

We also need to remember that every marketing experiment is a snapshot in time. A test run in February gives us key insights into how a channel performed during that time, but as we move further into the year, that test’s relevance goes down. Everything evolves – your business, the creatives you’re using, the marketing platforms, and even your competitors.

So, you need to consistently run these tests and experiments to create a virtuous cycle of better marketing performance and improved model accuracy.