Marketers are in a tough spot when it comes to measurement: iOS 14 made everyone conservative about tracking, performance marketers are nervous about testing less measurable channels, and their attribution methods all disagree, leaving no single source of truth.

If there’s one thing we want to achieve at Recast, it’s to get marketers at modern consumer brands who are only confident reporting on last-click performance to feel like they can test anything because they can measure everything. We do that by showing that Bayesian MMM gives them one source of truth that unifies their attribution methods.

This journey from traditional measurement techniques to marketing mix modeling (MMM), often filled with moments of enlightenment, doubts, and breakthroughs, is what this article aims to capture. We’ll offer insights into the process and highlight potential hurdles along the way, hoping to provide a roadmap for those embarking on this path.

The Marketing Measurement Journey: welcome aboard

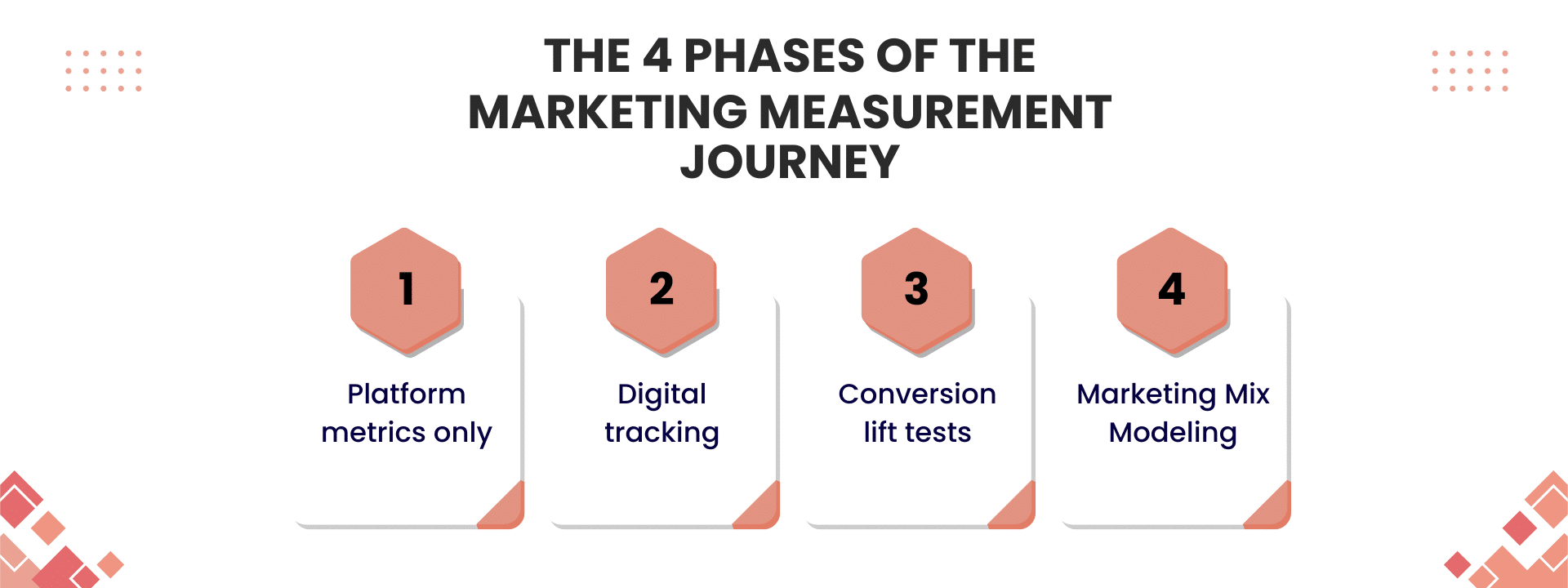

The journey we see marketers go through has four phases. Every phase has a moment of confidence, followed by doubt, and then by an “oh no…” moment. Let’s take the trip:

Phase 1: Platform metrics only

You’re at this stage if you get all your metrics from your platforms: Meta, Google, etc. You’re also here if you have partnered with an agency and that’s all they use.

- Confidence: “This is a great ROAS and the campaigns are scaling nicely.” This might be the most blissful stage as a marketer, but soon you’ll find platform analytics unreliable.

- Doubt: “Why doesn’t FB analytics match Google Analytics?” You start to see that platforms report differently, and you don’t know which one to use as your reference.

- “Oh no…”: “Customers from ads are getting misattributed as organic.” The gravity of platforms misreporting starts to dawn as you realize you can’t allocate your budget with confidence. And so you jump to…

Phase 2: Digital tracking

This is the default for online marketing, where browser cookies (or similar identifiers) allow advertisers to track the marketing source of their customers. You’re here if you use last touch only, some form of multi-touch attribution (MTA), or, for the most advanced, a post-checkout survey.

- Confidence: “We use machine learning to do data-driven attribution.” You think you can track everyone across the buying journey, and you might have even added a third-party vendor to make sure. You trust them, for now.

- Doubt: “Do view-through conversions deserve that much credit?” You’re starting to see digital tracking isn’t always correct, and you question how reliable MTA is when assigning credit to each touchpoint.

- “Oh no…”: “People are Googling for discount codes before checking out…” You have no better way of measuring how incorrect MTA is. You feel like you’re flying blind, and you see more privacy regulations coming. And so you jump to…
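To make the Phase 2 doubt concrete, here is a minimal Python sketch of how two common attribution rules can split credit for the same purchases. The journeys, channel names, and function names are all hypothetical, made up for illustration; this is not how any particular vendor computes attribution.

```python
from collections import defaultdict

# Hypothetical journeys: the ordered touchpoints seen before each purchase.
journeys = [
    ["meta_ad", "email", "coupon_site"],
    ["meta_ad", "coupon_site"],
    ["google_search", "meta_ad"],
]


def last_touch(paths):
    """Give 100% of each conversion to the final touchpoint."""
    credit = defaultdict(float)
    for path in paths:
        credit[path[-1]] += 1.0
    return dict(credit)


def linear(paths):
    """Split each conversion evenly across every touchpoint in the path."""
    credit = defaultdict(float)
    for path in paths:
        share = 1.0 / len(path)
        for channel in path:
            credit[channel] += share
    return dict(credit)


if __name__ == "__main__":
    print("last touch:", last_touch(journeys))
    print("linear:    ", linear(journeys))
```

Under last touch, the coupon site gets credit for two of the three purchases even though shoppers only visited it to look for a discount code. Under the linear rule, the Meta ad comes out on top instead. Neither rule tells you what would have happened without the ad, which is the question the next phase tries to answer.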

Phase 3: Incrementality

You’re here if you run conversion lift tests to validate what you’re seeing from the platforms and analytics. These tests can be geography-based experiments, go-dark tests or, less commonly, individual-level randomized controlled trials.

- Confidence: “Our lift tests tell us our ads are 60% incremental.” You’re now focused on incrementality. You run experiments, you get insights back, and you trust them blindly. How could a test be wrong?

- Doubt: “We ran a lift test but I’m not sure if our agency executed it correctly and the results were really different from what we expected.” You realize running tests is not as simple as it looks. They’re also expensive. You also know they’re only a snapshot in time.

- “Oh no…”: “We just realized that our instrumentation was incorrect and all of our historic lift test results were incorrect. We don’t have the right team internally to do this work.” You question whether you have the system in place to run experiments and get burned by tests that don’t bring back any learnings. And so you jump to…
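For readers who want to see the arithmetic behind a claim like “our ads are 60% incremental,” here is a rough Python sketch. It assumes a simple two-group test (users who could see ads versus a holdout), defines incrementality as the share of treatment-group conversions that would not have happened without ads, and uses a normal approximation for the confidence interval. The function name and the numbers are hypothetical, and real lift tests (especially geo tests) usually need more careful designs than this.

```python
import math


def lift_summary(treat_users, treat_conv, ctrl_users, ctrl_conv, z=1.96):
    """Summarize a two-group lift test (illustrative only)."""
    if treat_conv == 0:
        raise ValueError("no treatment conversions; incrementality is undefined")
    p_treat = treat_conv / treat_users   # conversion rate with ads
    p_ctrl = ctrl_conv / ctrl_users      # conversion rate in the holdout
    diff = p_treat - p_ctrl              # absolute lift in conversion rate
    # Standard error of the difference between two proportions.
    se = math.sqrt(
        p_treat * (1 - p_treat) / treat_users
        + p_ctrl * (1 - p_ctrl) / ctrl_users
    )
    return {
        "incremental_conversions": diff * treat_users,
        "incrementality": diff / p_treat,
        "lift_ci": (diff - z * se, diff + z * se),
    }


if __name__ == "__main__":
    # Hypothetical test: 100,000 users per group.
    result = lift_summary(
        treat_users=100_000, treat_conv=500,
        ctrl_users=100_000, ctrl_conv=200,
    )
    print(result)
```

With these made-up inputs, roughly 300 of the 500 treatment conversions are incremental, which is the “60% incremental” figure. The interval matters as much as the point estimate: with small groups or low conversion rates it can easily include zero, and a mistake in how users are assigned or how conversions are counted invalidates the whole calculation.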

Phase 4: Modeling

You’re here if you use MMM (through a consultant, a vendor like Recast, or in-house) to find which channels in your mix deserve credit for sales and to reallocate your budget to the highest-performing channels.

- Confidence: “We use a proprietary model, custom built to our needs.” You think you’ve finally figured it out: you know how to balance digital tracking, lift tests, and statistical models to make the best marketing decisions you can.

- Doubt: “It seems like we need a better media mix model but our internal data scientists don’t understand marketing and I can’t tell which vendors to trust.” Neither custom-made nor open-source models are good enough. You look for vendors, but how do you know which one is best? MMM is hard…

- “Oh no…”: “These MMM results don’t make any sense and can’t possibly be right. My boss keeps asking me to true up the results but it all feels like black-box snake oil.” The results you get are not trustworthy and you either ignore them or follow them to make skewed budget-allocation decisions. You can’t validate them.

So, what happens next?

Two things:

First, you realize the core problem of MMM is not running a model and getting results; it’s validating that those results are correct. At Recast, we take model validation very seriously because we don’t want the model to just yield “interesting insights.” We want our partners to use it to drive actual budget changes and efficiency improvements. We can then use those budget changes to test and validate the model’s results, leading to a virtuous cycle of better marketing performance and better model performance.

Second, and tied to this idea, you start triangulating. You see that no method has the whole truth and that you need to use digital tracking, conversion lift tests, and MMM in conjunction. That seems to be the final stop in the journey… for now, at least.

PS. If you’re interested in seeing what phase some of the top consumer brands are in, we’ve built a database providing unique insights into their measurement methods – check it out.