There’s a lot of confusion among marketers about the right way to use lift tests or incrementality experiments to calibrate the results of a media mix model (MMM). We often see questions like:

- Should I use experimental results from other brands or products to calibrate my MMM?

- What if I have multiple different experiments, how do I choose which one to use?

- Won’t experiments just bias the MMM’s results?

You need to be thoughtful when incorporating information from incrementality experiments into an MMM, so in this post we’ll:

- Talk a little bit about the theoretical relationship between incrementality experiments and MMMs.

- Answer commonly asked questions.

- Then share some guidelines for the appropriate way to do calibration.

Why Calibrate?

The goal of marketing measurement is to understand incrementality: the true causal relationship between marketing activity and a conversion outcome. Companies use both media mix models and incrementality experiments to measure this causal relationship. When they work, media mix models are incredibly useful: they can measure marketing performance across all channels simultaneously, and they can be used for planning and forecasting exercises.

However, media mix models are extremely complex, assumption-heavy observational statistical models. In general, that means they’re much more likely to be wrong than right, and all MMM results should be assumed wrong until they’re proven correct. Within-model metrics like R-squared, statistical significance, and in-sample MAPE cannot be used to determine whether an MMM is correct.
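To see why in-sample fit can’t settle the question, here is a minimal Python sketch with made-up data (the channel names, spend levels, and coefficients are all hypothetical). When two channels’ spend moves in lockstep, models that assign completely different ROIs to each channel produce identical predictions, and therefore identical R-squared.

```python
import numpy as np

def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    return 1.0 - ss_res / ss_tot

rng = np.random.default_rng(0)
weeks = 104
# Hypothetical weekly spend: social is always half of search (perfectly collinear)
search = rng.uniform(50, 150, size=weeks)
social = 0.5 * search
# Hypothetical "true" process: $2 of sales per $1 in each channel, plus noise
sales = 1000 + 2.0 * search + 2.0 * social + rng.normal(0, 20, size=weeks)

# Three candidate models that credit the two channels very differently
fits = {
    "true ROIs (2x, 2x)": 1000 + 2.0 * search + 2.0 * social,
    "all search (3x, 0x)": 1000 + 3.0 * search,
    "all social (0x, 6x)": 1000 + 6.0 * social,
}
for name, pred in fits.items():
    print(f"{name}: R^2 = {r_squared(sales, pred):.4f}")
```

All three lines print the same R-squared. Real spend series are rarely perfectly collinear, but heavily correlated channels produce a milder version of the same problem.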

Incrementality experiments, when they’re well run, require fewer assumptions to make claims of causality. Most economists and statisticians agree that deliberate experiments are a better way of measuring causality than observational models. However, they can be expensive to run (in dollar cost or opportunity cost) and most companies run them infrequently.

So, in theory, we want to use the high-signal experimental results to improve the media mix model, which can then be used for forecasting, scenario analysis, and measuring channels that are more difficult to experiment with. That gets us the best of both worlds!

Common Questions

So broadly, it seems like calibrating an MMM with experimental results makes sense. But practically, how do we integrate those results?

Whether you’re doing the calibration with priors in a Bayesian model or via some other penalization method in a non-Bayesian model, there are some questions you might face:

Should I use experimental results from other brands or other products to calibrate my MMM? What about “industry standards”?

In general, this is not a good idea. Every brand is different, and just because some media channel works well for some other brand doesn’t mean it works well for yours. Really, you only want to calibrate a media mix model with experiments that line up exactly with the marketing channel and product combination you’re including in the media mix model.

What if I have multiple different experiments, how do I choose which one to use?

The best practice is to include all of the experiments in the media mix model, accounting for both their uncertainty and the time when each experiment was run (more on the best-practice approach below). However, if your MMM framework can’t incorporate experimental results this way, the next-best option is to summarize the full range of results that are compatible with the experiments and use that range for calibration.

For example, if you have three incrementality experiments you’ve run with the following results:

- 90% uncertainty interval between 3.5x ROI and 5x ROI

- 90% uncertainty interval between 2x ROI and 4x ROI

- 90% uncertainty interval between 3x ROI and 7x ROI

and you believe that each of these experiments is equally valid from a methodological perspective, then it would make sense to calibrate the MMM with an ROI ranging from 1x to 8x. That covers the full range of results from your experiments plus a little bit extra to account for uncertainty and presumed measurement error.
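The arithmetic above can be sketched in a few lines of Python. The `padding` of 1x on each side mirrors the example; it is a judgment call, not a rule. The second helper is an optional, hypothetical step for frameworks that expect a normal prior: it assumes you want to treat the padded range as a 90% interval, which is our assumption rather than something the example specifies.

```python
from statistics import NormalDist

# Hypothetical 90% uncertainty intervals (lower ROI, upper ROI), one per experiment
experiments = [(3.5, 5.0), (2.0, 4.0), (3.0, 7.0)]

def calibration_range(intervals, padding=1.0):
    """Smallest range covering every interval, widened by `padding` on each side.

    `padding` stands in for measurement error that the reported
    intervals may not capture.
    """
    lower = min(lo for lo, _ in intervals)
    upper = max(hi for _, hi in intervals)
    return lower - padding, upper + padding

def range_to_normal(low, high, coverage=0.90):
    """Normal (mean, sd) whose central `coverage` interval is [low, high]."""
    z = NormalDist().inv_cdf(0.5 + coverage / 2)  # about 1.645 for 90%
    return (low + high) / 2, (high - low) / (2 * z)

low, high = calibration_range(experiments)
print(low, high)  # 1.0 8.0
mean, sd = range_to_normal(low, high)
print(f"prior mean {mean:.2f}, sd {sd:.2f}")
```

Note that a normal prior built this way puts a little probability below 0x ROI; if your framework supports it, a distribution restricted to positive values may fit better.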

If the experiments are of varying quality, then you will have to use your judgement to combine the results into a reasonable range to use to calibrate the media mix model.

Aren’t the experiments just biasing the results?

Yes! But that’s exactly the idea. As we discussed above, incrementality experiments are generally more trustworthy than a media mix model on its own, so we want the experimental results to shape the results from the media mix model. If you don’t trust the experimental results, then you shouldn’t be using them to calibrate!
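A stylized way to see this “intended bias” is a normal-normal update, where the combined estimate is a precision-weighted average of the two sources. This is a simplification we’re assuming for illustration: it treats the MMM’s data-only estimate and the experiment as two independent normal distributions, which a real MMM does not do. The numbers below are hypothetical.

```python
def normal_update(prior_mean, prior_sd, exp_mean, exp_sd):
    """Combine two independent normal estimates of the same ROI.

    The result is a precision-weighted average: whichever source has the
    smaller sd pulls the combined estimate more strongly toward itself.
    """
    prior_prec = 1.0 / prior_sd**2
    exp_prec = 1.0 / exp_sd**2
    post_prec = prior_prec + exp_prec
    post_mean = (prior_prec * prior_mean + exp_prec * exp_mean) / post_prec
    return post_mean, post_prec**-0.5

# Hypothetical: the MMM alone says 1.5x (sd 1.0); a tighter experiment says 4x (sd 0.5)
m, s = normal_update(1.5, 1.0, 4.0, 0.5)
print(round(m, 2), round(s, 3))  # 3.5 0.447
```

The combined estimate moves most of the way toward the experiment because the experiment is more precise, which is exactly the behavior calibration is meant to produce.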

Best Practices

The best way to use incrementality experiments to calibrate a media mix model is with a Bayesian modeling framework that has time-varying coefficients. This allows you to:

- Take into account the time-bounded nature of experiments

- Incorporate the uncertainty of experimental results

- Incorporate evidence from multiple experiments

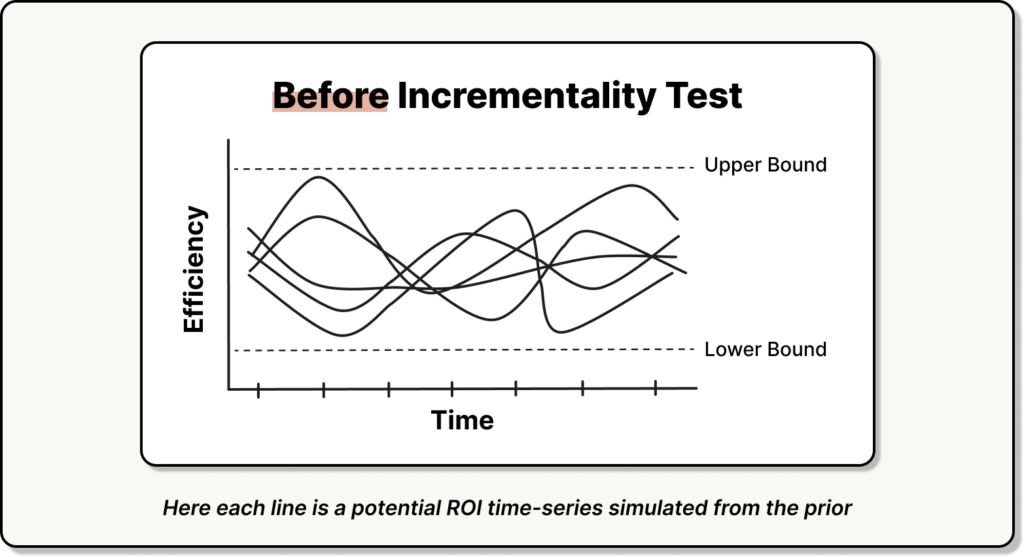

In this setup, you use broad, uninformative priors as the default for every marketing channel. Then you apply the results of each incrementality experiment (including the uncertainty!) only to the time periods when that experiment was run.
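A minimal sketch of that bookkeeping in Python, assuming each experiment is summarized as a hypothetical `(start_week, end_week, mean, sd)` tuple and the default prior is a hypothetical normal with mean 0 and sd 10. This is an illustration, not Recast’s implementation: it only builds per-week prior parameters, and it combines overlapping experiments by precision weighting.

```python
import numpy as np

def time_varying_roi_priors(n_weeks, experiments, default_mean=0.0, default_sd=10.0):
    """Per-week normal prior (mean, sd) for one channel's ROI.

    Every week starts with the broad default. Each experiment is a tuple
    (start_week, end_week, mean, sd) with an inclusive end week, and it only
    changes the weeks it covered. Overlapping experiments are combined by
    precision weighting rather than overwriting one another.
    """
    means = np.full(n_weeks, default_mean, dtype=float)
    sds = np.full(n_weeks, default_sd, dtype=float)
    informed = np.zeros(n_weeks, dtype=bool)  # weeks already set by an experiment
    for start, end, exp_mean, exp_sd in experiments:
        window = slice(start, end + 1)
        new_prec = 1.0 / exp_sd**2
        # Precision contributed by earlier experiments in this window (0 if none)
        old_prec = np.where(informed[window], 1.0 / sds[window] ** 2, 0.0)
        total_prec = old_prec + new_prec
        means[window] = (old_prec * means[window] + new_prec * exp_mean) / total_prec
        sds[window] = total_prec ** -0.5
        informed[window] = True
    return means, sds

# Hypothetical: 26 weeks of data, one experiment covering weeks 8 through 11
means, sds = time_varying_roi_priors(26, [(8, 11, 4.25, 0.46)])
print(sds[6:14])
```

On its own this produces abrupt jumps at the edges of each experiment window; it is the time-varying model linking neighboring weeks that lets the experimental information spread gradually beyond them.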

In practice, you might have uninformative priors for ROIs that change over time that look like this:

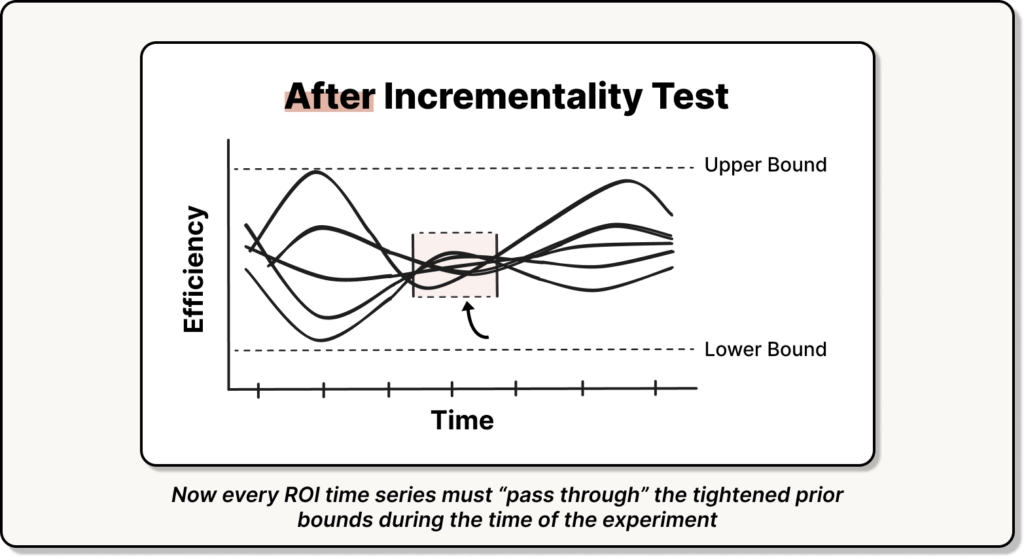

Then, once you include the incrementality experiment result, the priors tighten around the experimental estimate during the weeks the experiment ran.

This approach is theoretically correct and allows for the appropriate propagation of uncertainty as incrementality experiments become “stale” while still allowing them to influence the results of the MMM.
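To illustrate how uncertainty can grow as an experiment becomes stale, here is a sketch that swaps in a simple, well-known technique: a Gaussian random walk on ROI, solved with a Kalman filter and Rauch-Tung-Striebel smoother. The random-walk assumption, the `drift_sd` value, and treating the experiment as a single observation at one week are all our own simplifications, and this is not how Recast or any particular MMM performs inference.

```python
import numpy as np

def smoothed_roi_prior(n_weeks, obs_week, obs_mean, obs_sd,
                       init_mean=0.0, init_sd=10.0, drift_sd=0.2):
    """Per-week mean and sd of a random-walk ROI given one experiment.

    Assumes ROI_t = ROI_{t-1} + N(0, drift_sd^2), a broad N(init_mean, init_sd^2)
    starting belief, and one experimental reading at obs_week (0-indexed).
    """
    q, r = drift_sd**2, obs_sd**2
    m_pred, p_pred, m_filt, p_filt = (np.empty(n_weeks) for _ in range(4))
    for t in range(n_weeks):
        # Predict: carry last week's belief forward and add drift variance
        if t == 0:
            m_pred[t], p_pred[t] = init_mean, init_sd**2
        else:
            m_pred[t], p_pred[t] = m_filt[t - 1], p_filt[t - 1] + q
        # Update: only the experiment week has an observation
        if t == obs_week:
            k = p_pred[t] / (p_pred[t] + r)
            m_filt[t] = m_pred[t] + k * (obs_mean - m_pred[t])
            p_filt[t] = (1 - k) * p_pred[t]
        else:
            m_filt[t], p_filt[t] = m_pred[t], p_pred[t]
    # Backward (RTS) pass so the experiment also informs earlier weeks
    m_s, p_s = m_filt.copy(), p_filt.copy()
    for t in range(n_weeks - 2, -1, -1):
        c = p_filt[t] / p_pred[t + 1]
        m_s[t] = m_filt[t] + c * (m_s[t + 1] - m_pred[t + 1])
        p_s[t] = p_filt[t] + c**2 * (p_s[t + 1] - p_pred[t + 1])
    return m_s, np.sqrt(p_s)

# Hypothetical: 20 weeks, experiment reading of 4.25x (sd 0.46) at week 10
m, sd = smoothed_roi_prior(20, 10, 4.25, 0.46)
print(np.round(sd, 2))
```

The sd is smallest at the experiment week and widens steadily in both directions, so the experiment keeps influencing nearby weeks while its pull fades with distance.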

You can read more about how Recast can incorporate incrementality experiments into an MMM at this link.