Every few years, a new media channel rises and leaves marketers wondering how it fits in their current channel mix, how to test it, and how to measure it.

Think about TikTok in its early days – few brands had spent on the platform, and there was no historical data to help predict how it might perform.

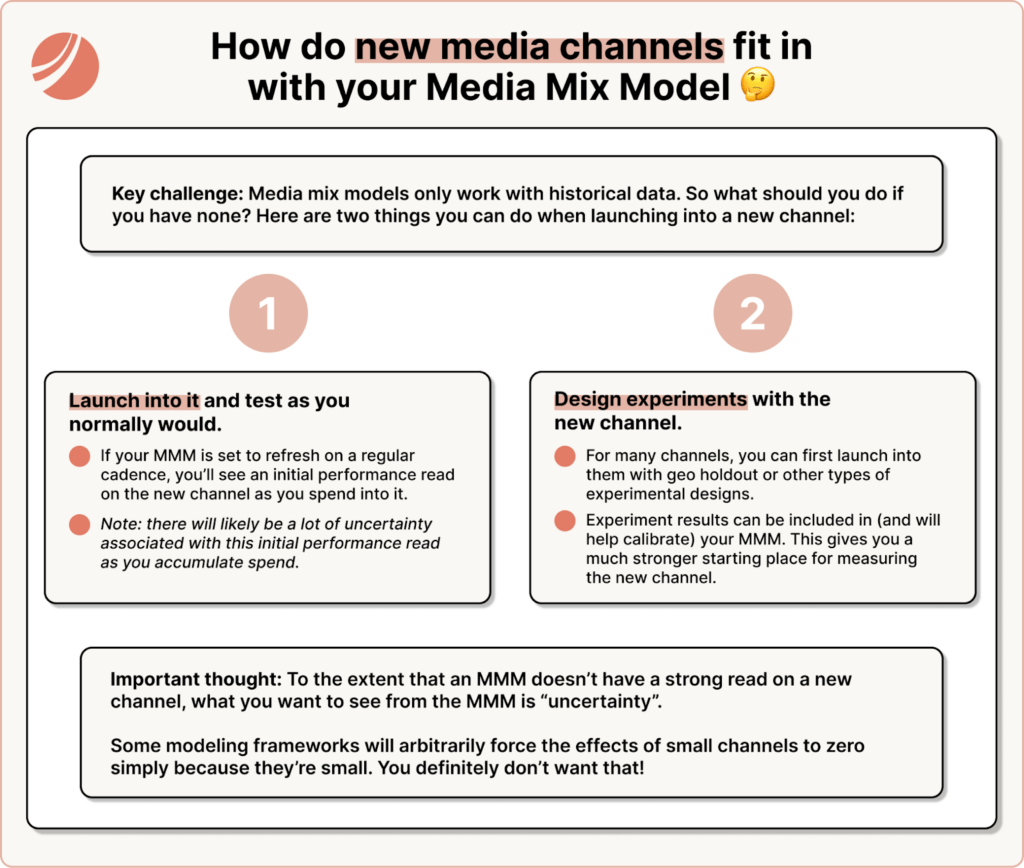

For marketers using media mix models (MMMs), this is a unique challenge. MMMs rely on historical data to measure performance and forecast future outcomes. Without that data, how can you determine if a new channel is incremental?

It sounds like a hard problem, but the approach is actually pretty straightforward.

In this article, we’ll explore:

- How MMMs handle new channels without historical data.

- Testing methods to measure incrementality for new channels.

- What role uncertainty plays in this and how to deal with it.

- How to iteratively optimize new platforms as they mature.

1. New Channels Require Data to Measure Performance

At its core, an MMM needs data to function. Without historical data, the model can’t immediately provide insights into a new channel’s effectiveness.

As noted above, when TikTok first emerged, no one could predict how it would perform because few brands had spent on it yet. MMMs aren’t magical: they rely on observing past behavior to forecast future outcomes.

The first step to integrating a new channel is generating data by making a meaningful investment. A large enough bet allows the model to detect whether the channel is driving incremental revenue or new customers.

Here’s why this works:

- Before your spend, the channel’s contribution is zero.

- A significant increase in spending creates a clear signal, helping the model see whether there’s a corresponding lift in sales, traffic, or other KPIs.

- Smaller bets, on the other hand, are often too subtle to provide actionable data. If your spend on a new channel is too small to generate a clear signal, the MMM won’t be able to distinguish actual performance from noise.

Ideally, your MMM is set up to refresh on a regular cadence so that you’ll see the performance of that channel as you start to spend on it.
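To make the signal-versus-noise point concrete, here’s a rough back-of-the-envelope sketch in Python. It is not how an MMM estimates anything; it simply compares the lift you’d expect from a given spend with the week-to-week noise in revenue. The function name `min_weekly_spend`, the assumed ROI, and every number below are hypothetical and only there for illustration.

```python
import math


def min_weekly_spend(weekly_sd, assumed_roi, weeks_on, weeks_baseline, z=2.0):
    """Rough weekly spend needed for the expected lift to stand out from noise.

    weekly_sd      -- standard deviation of weekly revenue (assumed known)
    assumed_roi    -- guessed revenue per dollar of spend (an assumption, not a measurement)
    weeks_on       -- number of weeks you spend on the new channel
    weeks_baseline -- number of comparable weeks with zero spend
    z              -- how many standard errors the lift should exceed
    """
    # Standard error of the difference between two averages of weekly revenue.
    standard_error = weekly_sd * math.sqrt(1 / weeks_on + 1 / weeks_baseline)
    # Expected weekly lift is assumed_roi * spend; solve for the spend
    # that makes the lift equal to z standard errors.
    return z * standard_error / assumed_roi


# Hypothetical inputs: revenue swings by about $20k week to week,
# and we guess the channel returns $2 per $1 spent.
spend = min_weekly_spend(
    weekly_sd=20_000, assumed_roi=2.0, weeks_on=4, weeks_baseline=16
)
print(f"Spend at least ~${spend:,.0f} per week to expect a visible lift")
```

Under these assumptions, a noisier business or a lower expected ROI pushes the required bet up, while running the test for more weeks brings it down.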

2. Test and Experiment

No one can tell how a channel will perform before you spend in it. Even if a brand similar to yours is already active there, its performance in that channel may tell you little about the performance you can expect.

You need to actually get into the channel, start testing, and see how it works for your brand. We know that sounds simple, but this is the most reliable way to measure the channel’s incrementality and understand its impact.

There are two testing methods we tend to recommend:

Geo-holdout tests

Geo-holdout tests involve running a campaign in select regions while holding out others as a control group.

For instance, if you’re launching TikTok ads, you might target three states while leaving three others untouched. By comparing performance metrics like revenue or new customer acquisition between the test and control regions, you can isolate the channel’s impact.

This method works well because external factors like seasonality or promotions affect the test and control regions alike, so the difference between the two groups can be attributed to the new channel.
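One common way to read a geo-holdout is a difference-in-differences comparison: measure how much revenue changed in the test regions, then subtract the change seen in the control regions over the same period. The sketch below is a minimal illustration of that idea, not a full test design. The function name `diff_in_diff` and the revenue figures are made up for the example.

```python
from statistics import mean


def diff_in_diff(test_pre, test_post, control_pre, control_post):
    """Estimate lift as the test regions' change minus the control regions' change."""
    test_change = mean(test_post) - mean(test_pre)
    control_change = mean(control_post) - mean(control_pre)
    # Whatever moved both groups (seasonality, promotions) cancels out here.
    return test_change - control_change


# Hypothetical weekly revenue (in $ thousands) for three states per group,
# before and during the campaign.
test_pre = [100, 120, 110]
test_post = [130, 150, 140]
control_pre = [90, 120, 105]
control_post = [100, 130, 115]

lift = diff_in_diff(test_pre, test_post, control_pre, control_post)
print(f"Estimated incremental revenue per state: ~${lift:.0f}k per week")
```

In this made-up data the control regions also grew, so only the extra growth in the test regions is credited to the channel.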

Dynamic spend tests

Dynamic spend tests involve making large, temporary shifts in your spending to see how performance changes.

For example, you could double your TikTok budget for two weeks and track whether there’s a corresponding spike in conversions. For a brand-new channel the shift is even starker: spend was zero for the channel’s entire history, and now there’s a big increase in marketing spend that helps us see whether it changes total revenue or the total number of new customers. Alternatively, you could pull back spending entirely to see if performance drops.

The question “what’s a bet we can make that will give us a signal?” is built into how we at Recast think marketers should approach this problem. We have developed tools that help marketers answer it systematically: what’s the right size of bet to make on a new channel that currently carries a lot of uncertainty?

We think about Recast as a tool for combining different types of evidence, both experimental and observational. It’s really useful to have a clear and consistent framework for generating signal on incrementality even when a randomized controlled trial isn’t possible.

3. Embrace Uncertainty

When you’re dealing with a brand-new channel, it’s important to acknowledge what you don’t know. A robust MMM should reflect this uncertainty instead of hiding it and forcing premature conclusions.

For example:

- If TikTok spend is new and limited, the model might show a wide range of possible outcomes for its impact. This isn’t a failure – it’s an honest reflection of the limited data available.

- Some MMMs arbitrarily reduce the impact of small channels to zero. That hides the uncertainty rather than reflecting it, which can be misleading.

If you have uncertainty, go run an experiment! Treat the model’s estimate as a hypothesis within your incrementality system that you need to go and validate. Then, as more data is collected over time, the model’s confidence will naturally improve.
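To see why confidence improves with more data, here’s a simplified sketch. It assumes the estimate of a channel’s weekly lift behaves like a plain average of noisy weekly observations, which is a big simplification: a real MMM produces its uncertainty ranges differently. The function name `interval_half_width` and the $20k noise figure are hypothetical.

```python
import math


def interval_half_width(weekly_sd, weeks, z=1.96):
    """Half-width of an approximate 95% interval around an average of `weeks` observations."""
    return z * weekly_sd / math.sqrt(weeks)


# Hypothetical: weekly revenue noise of $20k, observed for 2, 8, and 32 weeks.
for weeks in (2, 8, 32):
    half_width = interval_half_width(20_000, weeks)
    print(f"{weeks:>2} weeks of data: estimate is +/- ${half_width:,.0f}")
```

Under this assumption the range narrows with the square root of the amount of data, so you need four times as many weeks to halve the uncertainty. That is why a deliberate experiment is often faster than waiting for data to accumulate.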

TL;DR: Building a Strategy for Measuring New Channels

You won’t have a perfect system right away with new channels. What you’re looking for is a framework that helps you hypothesize, test, validate, learn, and consistently iterate.

- Start with a meaningful spend to generate a clear signal for the MMM.

- Use experiments like geo-holdouts or dynamic spend tests to isolate the channel’s impact.

- Expect wide confidence intervals at first and use them as a guide for further testing.

- Collect data, refine your approach, and optimize your spend as the model learns more about the channel.